Is This How the Strikeout Era Ends?

How the 2024 playoffs could change modern pitching philosophy

The game of baseball looks quite different than it did a generation ago. As some core truths about strikeouts became accepted dogma throughout the league — that pitchers were better off chasing them than trying to induce weak contact, that hitters should stomach them in service of hitting for power, that teams could identify and optimize for the subtle ball-trajectory indicators that predict them — the sport reshaped itself in the image of swinging and missing. Pitchers are throwing harder. Chase-pitch sweepers replaced get-me-over sinkers. The max-effort approach tires starters out faster, and managers mindful of the times-through-the-order penalty have quicker hooks. That leaves more outs in the hands of ever-nastier bullpens, whose most-unhittable members are increasingly called upon based on matchups and leverage instead of just inning number. Especially in the playoffs, when the high stakes and more-frequent off-days give teams extra incentive to punt durability in the name of maximum filth.

The league has been wringing its hands over these trends for my entire adult life. It’s true that more strikeouts mean less action, at least in the sense of seeing players run after the ball and around the bases. But as I’ve been arguing for over a decade, the narrative that baseball is less interesting is partly a self-fulfilling prophecy: the chess match between the batter and the pitcher, and the fruits of the arms race to throw nastier pitches, can be plenty entertaining when you’re not constantly hearing that the game used to be better. Moreover, while I think it’s possible to subtly incentivize balls in play via rule changes, Major League Baseball’s proposals for how to do so have mostly missed the mark, ranging from drastic overcorrections to actually increasing strikeouts across the league.

The best and ultimately only lasting way to stem the tide of swing-and-miss would be to discover a state of strategic disequilibrium: that pitchers have more to gain from pacing themselves and pitching to contact than from treating every at-bat like it’s the ninth inning. We haven’t seen much evidence of that in recent years. Or at least we hadn’t.

The 2024 MLB postseason was notable both for how aggressively teams unleashed their best bullpen arms, and how often they got shelled. Across the league, relievers threw 52 percent of the innings, the second-highest proportion in history and just the fourth time ever when the bullpens collectively outworked the rotations. Pitchers blew a record-setting 17 saves — more than the previous two Octobers combined. The 1.5 bullpen meltdowns per game were also the most in playoff history, a stark increase from this year’s 1.1 regular-season average. As I watched these parades of relief aces get lit up in the biggest games of the season, I wondered if we had reached an inflection point in the pursuit of strikeouts. Now that I’ve dug into the data, I suspect we have.

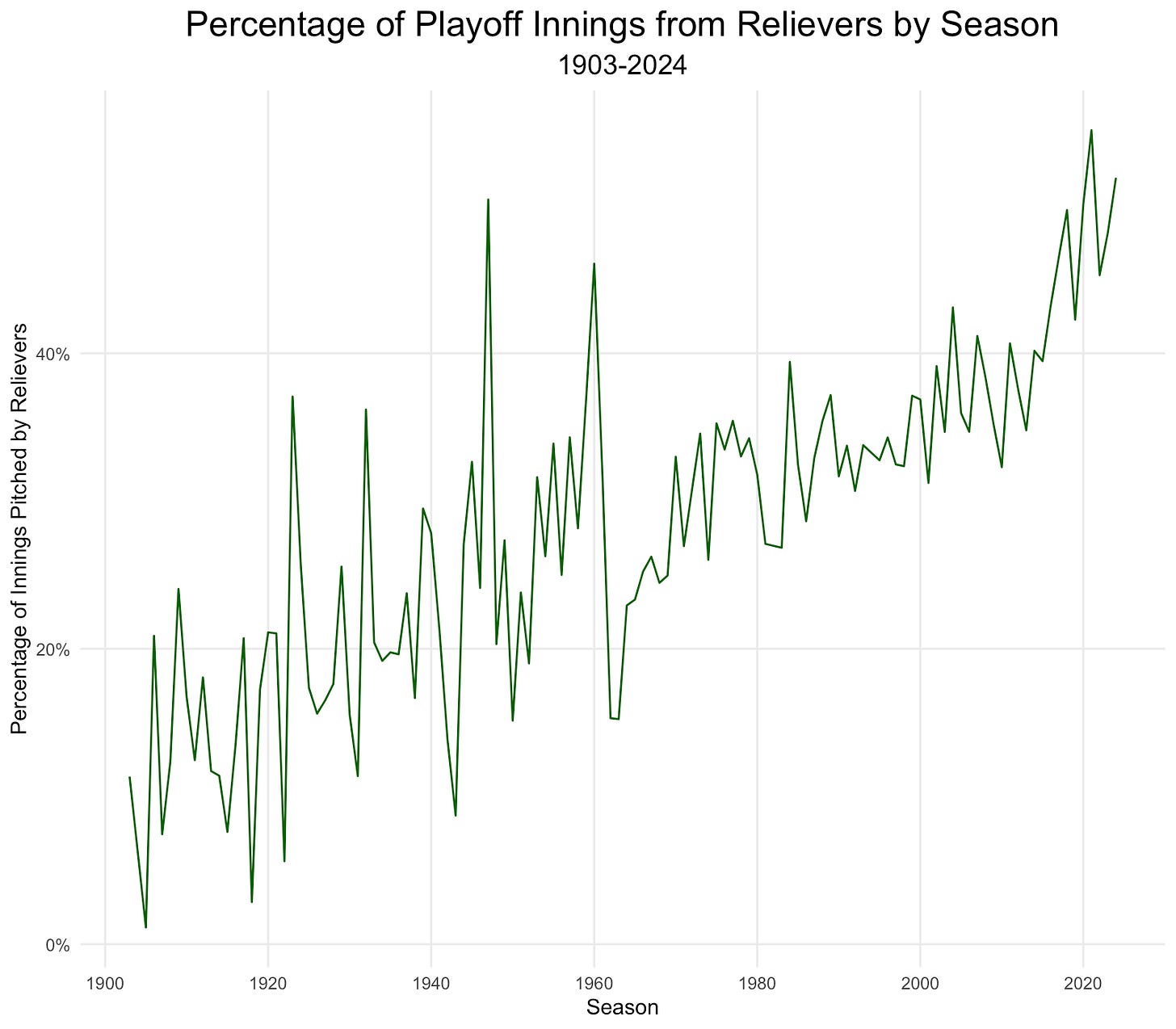

I associate the modern approach to October pitching with the 2014 Kansas City Royals. The underdog Royals were known for playing old-school small ball, yet their bullpen approach was ahead of its time. Each appendage of the vaunted “Three-Headed Monster” of Kelvin Herrera, Wade Davis, and Greg Holland appeared in 11 or more of Kansas City’s 15 postseason games. Their seventh-inning guy faced as many batters as their third starter. Across the league, it was only the sixth time in AL/NL history when relievers threw at least 40 percent of the innings.[1] To wit, while the bullpens’ share of playoff workloads has been steadily increasing for a century, the trend accelerated about a decade ago:[2]

It’s not just about how much teams are relying on their relievers, but whom specifically they are turning to. For example, the New York Yankees tasked their bullpen with throwing 60.2 innings this October, and though they called on nine relievers over the course of the postseason, Clay Holmes and Luke Weaver combined to throw 45 percent of them. Only eight starting pitchers in the 2024 playoffs threw more innings than Weaver did.
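A note on the arithmetic behind those shares: innings totals like 60.2 follow the usual box-score convention, where the digit after the decimal point counts outs (thirds of an inning) rather than tenths. Here is a minimal sketch of converting that notation to outs before taking a percentage. The function names are illustrative, and it assumes the article's figures use this convention.

```python
def innings_to_outs(ip: str) -> int:
    """Convert box-score innings notation (e.g. "60.2") to a count of outs."""
    whole, _, frac = ip.partition(".")
    thirds = int(frac) if frac else 0
    if thirds not in (0, 1, 2):
        raise ValueError(f"not valid innings notation: {ip!r}")
    return int(whole) * 3 + thirds


def outs_to_innings(outs: int) -> str:
    """Convert a count of outs back to box-score innings notation."""
    return f"{outs // 3}.{outs % 3}"


bullpen_outs = innings_to_outs("60.2")    # 60 full innings plus 2 outs
top_two_outs = round(0.45 * bullpen_outs)  # the 45 percent share, rounded to whole outs

print(bullpen_outs, outs_to_innings(top_two_outs))
```

Because the 45 percent figure is itself rounded, the result is only an approximation of the two relievers' combined workload.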

As a way to quantify this, I created a toy stat I am calling expected playoff ERA (xpERA): the league pitching line we would expect for the postseason given how each pitcher performed during the regular season and how much they threw in the playoffs. In other words, it’s an average of in-season ERAs weighted by postseason batters faced. I calculated xpERA for every year since the playoffs expanded beyond two rounds in 1995. This is an extremely oversimplified attempt to quantify the quality of playoff pitching — it does not account for park factors, seasonal run environments, batted-ball and sequencing luck, regression to the mean, specific matchups, end-of-season exhaustion, or changing roles in the postseason — but it seemed a reasonable proxy for how hard teams leaned on their best arms each October.
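The definition above is just a weighted average, which can be sketched in a few lines. The pitchers and numbers below are made up for illustration (they are not real 2024 lines), and the class and function names are hypothetical rather than taken from the original analysis.

```python
from dataclasses import dataclass


@dataclass
class PitcherLine:
    name: str          # label only; these pitchers are invented
    season_era: float  # regular-season ERA
    playoff_bf: int    # batters faced in the postseason


def expected_playoff_era(lines):
    """Average of regular-season ERAs, weighted by postseason batters faced."""
    total_bf = sum(p.playoff_bf for p in lines)
    if total_bf == 0:
        raise ValueError("no postseason batters faced")
    return sum(p.season_era * p.playoff_bf for p in lines) / total_bf


# Hypothetical inputs, not real data.
staff = [
    PitcherLine("Ace", 2.80, 120),
    PitcherLine("Closer", 2.00, 60),
    PitcherLine("Fifth starter", 4.50, 20),
]

xp_era = expected_playoff_era(staff)
print(round(xp_era, 2))
```

With these invented inputs the weighted figure lands well below the simple average of the three ERAs, because the two best arms face most of the batters. That is the effect the stat is meant to capture.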

The median xpERA in the Wild Card Era is 3.37. In 2024, it was 3.11. That’s the sixth-lowest on record, and 24 percent better than 2024’s regular-season baseline of 4.08. Fourteen of the 15 lowest years have come since 2010.[3] If you accept my premise that the modern postseason strategy of funneling as many innings as possible to your top pitchers started coming into vogue around a decade ago, then this metric passes the sniff test. And while the relationship between xpERA and actual postseason ERA is noisy, it is apparent both conceptually and visually.

The red dot on that chart is 2024, which confirms what you have probably surmised: the run environment in this year’s playoffs was more hitter-friendly than you’d expect given who was on the mound. Directionally this is normal, as pitchers face definitionally better-than-average competition in the postseason. But the 86-point gap between this year’s 3.11 xpERA and the observed 3.97 was exactly double the median difference in the Wild Card Era. It’s the eighth time in 30 years that we’ve seen a delta of more than 80 points, so it’s hardly unprecedented. (Though it’s notable that it’s happened thrice since 2020 after occurring in only five postseasons from 1995-2019.) Yet that undersells how unusual this year was.
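For readers who want to check the arithmetic, the headline figures can be reproduced from the three ERAs quoted in the article. This sketch treats one "point" as 0.01 runs of ERA, which is how the 86-point gap is used above. The implied median gap is derived from the "exactly double" statement, not a number reported directly.

```python
xp_era_2024 = 3.11      # expected playoff ERA (from the article)
actual_era_2024 = 3.97  # observed playoff ERA (from the article)
season_era_2024 = 4.08  # regular-season baseline (from the article)

# Gap between observed and expected, in points (hundredths of a run).
gap_points = round((actual_era_2024 - xp_era_2024) * 100)

# How much better the expected playoff ERA is than the regular-season baseline.
pct_better = (season_era_2024 - xp_era_2024) / season_era_2024 * 100

# "Exactly double the median difference" implies a median gap of half this size.
implied_median_gap = gap_points / 2

print(gap_points, round(pct_better), implied_median_gap)
```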

In the same vein as xpERA, I calculated xpOPS — the expected OPS by year, based on which hitters were getting October at-bats — and plotted playoff-ERA-above-projected against it. You may expect these metrics to be correlated, as it’s harder to pitch against better hitters. This year (the red dot again) represents a point of Pareto efficiency: no other postseason in the Wild Card Era has seen pitchers underperform to this degree against batters of this quality (.711 xpOPS) or worse. Among the seven other years with an xpOPS below .780, only one (2014) saw pitchers regress more dramatically in October.
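The Pareto claim can be made concrete with a small check: a season sits on the frontier if no other season combines hitters at least as weak (xpOPS at or below) with a pitching shortfall at least as large (ERA gap at or above). The sketch below uses invented seasons labeled A through D, not real years, and the function name is hypothetical.

```python
def is_pareto_extreme(target, seasons):
    """True if no other season pairs an xpOPS at or below the target's
    with an ERA gap at or above the target's."""
    t_ops, t_gap = seasons[target]
    for label, (ops, gap) in seasons.items():
        if label == target:
            continue
        if ops <= t_ops and gap >= t_gap:
            return False  # another season was at least as extreme on both axes
    return True


# Hypothetical (xpOPS, playoff ERA minus xpERA) pairs, for illustration only.
seasons = {
    "A": (0.760, 0.86),
    "B": (0.790, 1.00),
    "C": (0.740, 0.40),
    "D": (0.770, 0.30),
}

frontier = [label for label in seasons if is_pareto_extreme(label, seasons)]
print(frontier)
```

More than one season can sit on the frontier at once: in this toy data, B has a bigger gap than A but against better hitters, so neither dominates the other.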

We can look at this from the other side, too. Hitters underperformed their xpOPS by 71 points in the 2024 playoffs, though that’s a less-dramatic dropoff than the median of 95 points. With only one exception (2014 again), no other postseason in the last 30 years has featured hitters holding their own so well against such high-quality arms, or pitchers this good failing to dampen opposing bats more thoroughly.

I can’t prove why 2024 was unusual in this regard, nor that it’s even truly an outlier in the grand scheme of things. But I’m convinced it is a sign that we have finally found the upper limit for how hard teams can lean on their back-end arms before it ceases to be advantageous.

We know that relievers are more prone to fluctuations in effectiveness than starters, that they are not as fresh when they pitch multiple days in a row, and that they become more hittable as batters get more looks at them. It’s widely understood that warming up in the bullpen is taxing for a pitcher, even when they do not enter the game. If a reliever is used to getting loose in the late innings, is it farfetched to think that having them be on call for the first sign of trouble may be a subtle source of fatigue?

Indeed, in all the exploratory noodling I did with xpERA, the single strongest relationship I identified was between pitcher underperformance and the proportion of playoff innings allocated to relievers. With the enormous caveat that this may get the causal relationship backward for past seasons — once upon a time, pulling the starting pitcher early was a sign that they had already given up a bunch of runs, not that managers wanted to get to their bullpens as soon as possible — there is a clear correlation between bullpen use and relative run-prevention. After controlling for quality of talent, a postseason-wide shift of one inning per game from starters to relievers is associated with a 47-point increase in ERA.
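One way to picture that kind of relationship is a simple least-squares fit of pitcher underperformance (playoff ERA minus xpERA) on reliever innings per game. The article does not spell out the exact model, so this is a sketch under that assumption: talent is "controlled for" only by using the gap to xpERA as the outcome. The data points are invented and are not meant to reproduce the 47-point figure.

```python
from statistics import fmean


def ols_slope_intercept(xs, ys):
    """Ordinary least squares for one predictor: returns (slope, intercept)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    x_bar, y_bar = fmean(xs), fmean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("predictor has no variance")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Hypothetical postseasons: reliever innings per game vs. ERA gap (in runs).
reliever_ip_per_game = [3.0, 3.5, 4.0, 4.5, 5.0]
era_gap = [0.2, 0.3, 0.6, 0.6, 0.8]

slope, intercept = ols_slope_intercept(reliever_ip_per_game, era_gap)
print(f"{slope * 100:.0f} points of ERA per extra reliever inning per game")
```

The slope reads the same way as the figure in the text: the change in ERA gap associated with shifting one inning per game from starters to relievers. As with the original, it describes an association and says nothing about which way the causation runs.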

If teams’ increasing reliance on their top relievers in the playoffs is indeed backfiring, there are three possible ways for them to adjust. First and simplest, managers could distribute the October workloads more evenly. Did the Cleveland Guardians really need Emmanuel Clase to close out their 7-0 win in Game 1 of the ALDS? It’s facile to draw a line between this unnecessary outing and the normally lights-out Clase coughing up a game-winning homer in Game 2, but it’s hard to shake the what-if. Of course this is easier said than done. No one wants to watch their team’s middle reliever blow a playoff game while the closer sits idly in the bullpen.

A second possibility is that managers could try to keep their back-end relievers fresher. This could mean using them more sparingly during the regular season, yet going much beyond teams’ existing caution with bullpen workloads could create prohibitive roster inflexibility, and bubble-wrapping your best players for the playoffs means risking not even getting there. Alternatively, they could dial back their intensity to reduce fatigue, but pitching mechanics aren’t always conducive to modulating effort, and doing so would inhibit the baseline dominance that makes them relief aces in the first place.

Which brings us to the third and most-interesting option. If teams want to avoid stretching their bullpens too thin in October, the obvious solution would be to leave their starters in longer. To plan on letting their aces pitch deep into games, to tolerate their mid-rotation arms going a third time through the order, to not pull their Game 4 starter at the first sign of trouble. It would mean seeing durability in the postseason as a priority, not just an incidental luxury. Keeping the pitch count low, turning over a lineup multiple times, and pacing yourself through the middle innings may be seen as more-valuable skills than dominating for three innings at a time.

If Spahn and Sain and a bullpen game were no longer enough for a playoff rotation, teams with championship aspirations would have to develop or acquire some legitimate old-school starters. The ability to pitch deep into games is not something that most players can just pick up at the end of September.[4] So as teams placed renewed importance on being able to throw six or seven innings each time out, the pitching approach you’d expect from such innings-eaters would make a comeback during the regular season, too: lower velocities, more pitches in the zone, and most importantly, fewer strikeouts. Baseball could start to turn back the clock.

None of this would happen overnight. One month’s worth of suggestive evidence does not negate the decades of strategic evolution that begat the modern game. It would take time to develop the next generation of Paul Byrds and Jake Westbrooks. Maybe a year from now I’ll reread this article and cringe while Weaver or Clase exhaustedly hoists his World Series MVP trophy after throwing 30 scoreless playoff innings. Still, I suspect some of the countless club analysts constantly searching for competitive advantages have already made this same connection. If elite relievers struggle so conspicuously again in an upcoming postseason — or if some enterprising team preemptively zags and rides their innings-eaters and a well-rested bullpen to a deep October run — every team will connect the dots. I can’t predict how far back the balance of the game would shift, or what innovations would come from combining modern pitch design with an old-school emphasis on durability. But some years from now, we may look back on 2024 as the beginning of the end of the strikeout era.

[1] Here and throughout this article, pitcher categorizations are based on who was on the mound at the start of the game, regardless of their usual role or whether they were the planned opener for a long reliever.

[2] If you’re wondering, since I would be: that big spike in the middle is 1947, which doesn’t get talked about much but was one of the wildest World Series in MLB history.

[3] The only one missing is 2023, when Brandon Pfaadt faced the seventh-most playoff hitters after getting shelled for a 5.72 ERA in the regular season.

[4] Consider how slowly pitchers ramp up to full-length outings over the course of Spring Training, and even into the start of the regular season.

“Though it’s notable that it’s happened thrice since 2020 after occurring in only five postseasons from 1995-2019.) Yet that undersells how unusual this year was.”

Seems like it might be worth investigating how much the extra round of playoffs is influencing this.

I think there are some things MLB could do to change the equilibrium, in both the regular season and playoffs. Restrictions on active roster size or the ability to option relievers frequently would be regular season examples. And in the playoffs, fewer offdays would be the solution. My suggestion to achieve that would be a large expansion that allows for more geographic alignment in the early playoff rounds.

Interesting and thought provoking. Thanks!

Can't a lot of this just be chalked up to the fact that the top-2 offenses (by wRC+) made it to the World Series and thus 25 of 43 postseason games involved one or both of these offenses?